Transaction data is deceptively simple.

On the surface, it looks like a list of debits and credits with dates and amounts. In practice, it is one of the hardest datasets to operate reliably in a fintech product. Transactions arrive late, change state, get duplicated, disappear, reappear, and occasionally contradict balances that were considered authoritative just hours earlier.

Most fintech teams discover this the hard way.

This case study describes how INSART approached Plaid Transactions ingestion for a production fintech platform, turning a fragile, incident-prone data flow into a stable, reconciled, and predictable subsystem that engineers could finally trust.

Client Context

The client was a fintech platform that relied heavily on transaction data to power core product functionality. Transactions were used for underwriting decisions, internal reporting, user-facing insights, and downstream analytics. Accuracy was not optional. Small inconsistencies had outsized consequences.

The company had real users, real volume, and real financial exposure. Transaction ingestion was no longer an internal detail. It had become a foundational dependency for business logic and decision-making.

Plaid was already integrated. Transactions were being pulled. On paper, the system worked. In reality, the data layer was unstable, and every new feature that relied on transactions increased operational risk.

Initial Situation

The symptoms appeared gradually, then all at once.

Duplicate transactions surfaced in user dashboards without a clear pattern. Balances derived from transactions did not always match balances fetched from accounts. Webhooks arrived out of order, triggering partial syncs that overwrote newer data. Engineers spent hours debugging issues that could not be reproduced reliably.

Retry logic existed, but it was inconsistent. Some failures retried aggressively and caused duplication. Others failed silently and left gaps in historical data. Backfills were risky because no one could confidently predict what they would overwrite.

Most concerning was the lack of observability. When something went wrong, the team could see that data was wrong, but not how it became wrong. The system had lost its narrative.

This was no longer a “Plaid problem.” It was a data integrity problem.

Reframing the Problem

INSART began by reframing the challenge.

Transaction ingestion is not about fetching data. It is about maintaining a consistent, evolving view of financial reality over time. That requires explicit rules around ordering, identity, retries, and reconciliation.

The core shift was this: instead of asking “Did we fetch the transactions?”, the team needed to ask “Can we prove that the data we have is complete, ordered, and reconciled?”

Everything that followed was built around that question.

Core Technical Challenges

Once the system was examined as a whole, several root challenges became clear.

Plaid's /transactions/sync endpoint was being used, but without a solid understanding of its cursor-based semantics. Webhooks were processed eagerly, with no guarantees about ordering or idempotency. Retry logic existed, but it was not coordinated with sync state, which led to partial replays and duplication.

There was no clear distinction between incremental syncs, backfills, and reconciliation passes. Balances were sometimes treated as authoritative, sometimes ignored, depending on the context. Debugging required reading logs across multiple services and mentally reconstructing event order.

The system worked often enough to be dangerous, but not consistently enough to trust.

Designing Transactions Ingestion as a Deterministic System

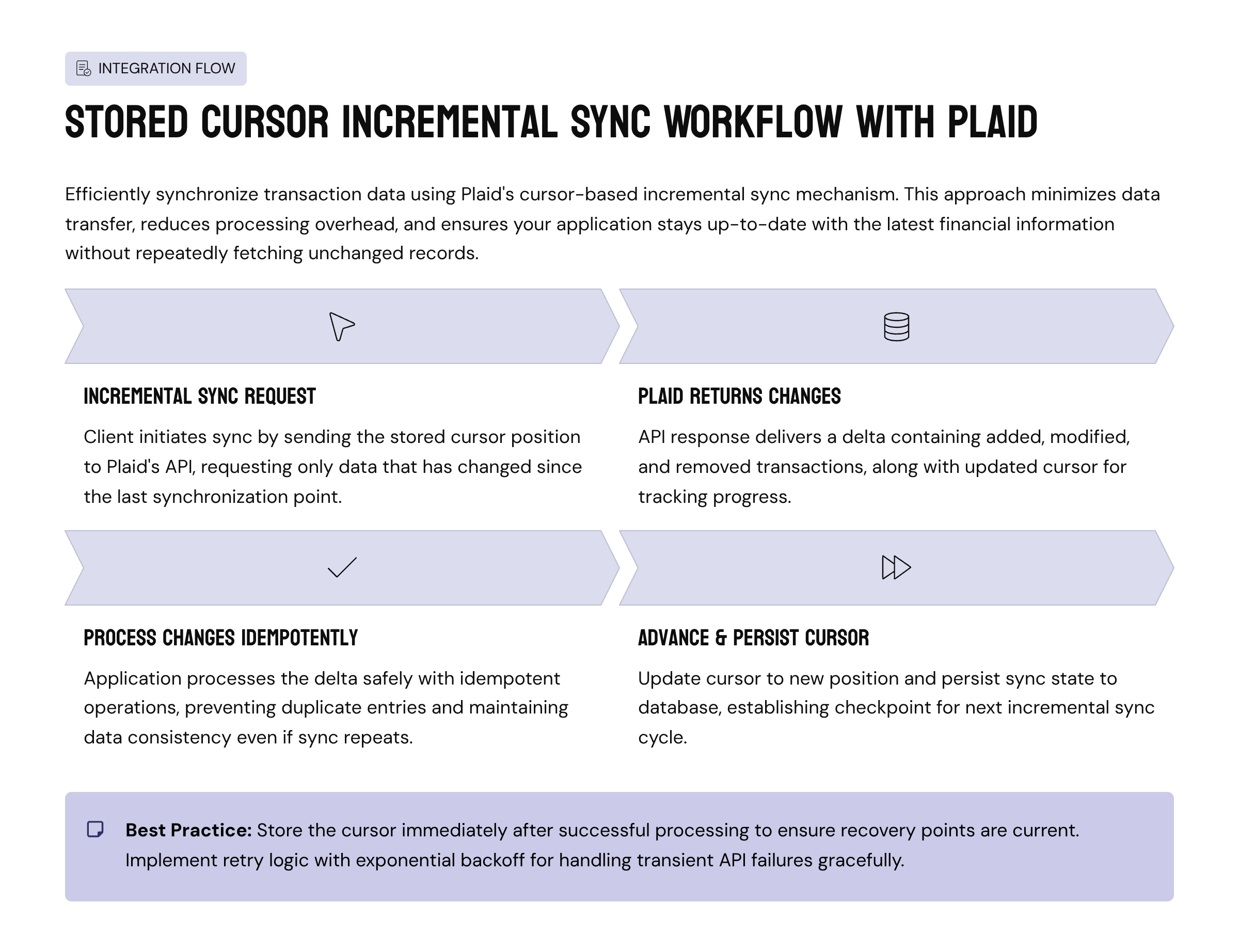

INSART approached the solution by treating transaction ingestion as a stateful, deterministic pipeline, not a background job.

The first step was to establish a single source of truth for sync state. Every Plaid item received its own transaction cursor, stored and advanced explicitly. No sync was allowed to proceed without a known starting point and a clear end condition.

Diagram: Transaction Sync Control Flow

```text
Stored cursor
  ↓
Incremental sync request
  ↓
Plaid returns added / modified / removed
  ↓
Process changes idempotently
  ↓
Advance cursor
  ↓
Persist new sync state
```

This flow ensured that no data was skipped, replayed accidentally, or applied out of order.
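The sync state behind this flow can be sketched as a small store. This is a minimal sketch, assuming an in-memory Map stands in for the real database table; `SyncState`, `SyncStateStore`, and the version-based compare-and-set in `advance` are hypothetical names and a hypothetical mechanism, not the platform's actual schema. It illustrates the two rules of this section: every sync starts from a known state (a missing row is the explicit initial-sync state), and a cursor only moves if nothing else has moved it since the sync began.

```typescript
// Hypothetical per-item sync state; in production this would be a DB row.
interface SyncState {
  cursor: string | undefined; // undefined = no sync has completed yet
  updatedAt: Date;
  version: number; // incremented on every successful advance
}

class SyncStateStore {
  private readonly states = new Map<string, SyncState>();

  // A sync always starts from a known state: a missing row is treated as the
  // explicit "initial sync" state rather than as an error.
  get(itemId: string): SyncState {
    return (
      this.states.get(itemId) ?? { cursor: undefined, updatedAt: new Date(0), version: 0 }
    );
  }

  // Compare-and-set: persist the new cursor only if the state has not changed
  // since `expectedVersion` was read. Returns false on a conflict.
  advance(itemId: string, expectedVersion: number, nextCursor: string): boolean {
    const current = this.get(itemId);
    if (current.version !== expectedVersion) return false;
    this.states.set(itemId, {
      cursor: nextCursor,
      updatedAt: new Date(),
      version: current.version + 1,
    });
    return true;
  }
}
```

A run that read version 0 but lost a race to another run gets `false` from `advance`; it can re-read the state and start again instead of overwriting newer progress.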

Proper Use of Plaid Transactions Sync

INSART rebuilt transaction ingestion around Plaid’s incremental sync model instead of ad-hoc fetches. Each sync cycle processed three explicit categories: added, modified, and removed transactions.

Rather than assuming transactions were immutable, the system treated modification as a normal event. Updates were applied only if they advanced the known state of a transaction, and removals were handled explicitly rather than ignored.
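These rules can be sketched as a small in-memory ledger. The sketch assumes, hypothetically, that the pipeline stamps each sync batch with an increasing sequence number (`seq`); that is not a Plaid field, and `ChangeBatch` and `TransactionLedger` are illustrative names. A change is applied only if it comes from a batch at least as new as the one that last touched the row, and removals leave a tombstone so that a stale replay cannot resurrect a removed transaction.

```typescript
// Shapes loosely modeled on the fields /transactions/sync returns.
interface Tx {
  transaction_id: string;
  amount: number;
  date: string;
  pending: boolean;
}

interface ChangeBatch {
  seq: number; // hypothetical, assigned by the pipeline, increasing per item
  added: Tx[];
  modified: Tx[];
  removed: { transaction_id: string }[];
}

interface StoredTx {
  tx: Tx | null; // null marks a tombstone for a removed transaction
  lastSeq: number; // sequence of the batch that last wrote this row
}

class TransactionLedger {
  readonly rows = new Map<string, StoredTx>();

  apply(batch: ChangeBatch): void {
    // Added and modified are both upserts keyed by transaction_id.
    for (const tx of [...batch.added, ...batch.modified]) {
      this.write(tx.transaction_id, tx, batch.seq);
    }
    // Removals are explicit: keep a tombstone instead of deleting the row.
    for (const r of batch.removed) {
      this.write(r.transaction_id, null, batch.seq);
    }
  }

  private write(id: string, tx: Tx | null, seq: number): void {
    const existing = this.rows.get(id);
    // Skip changes from batches older than the one that last wrote this row.
    if (existing && existing.lastSeq > seq) return;
    this.rows.set(id, { tx, lastSeq: seq });
  }

  // Transactions that are currently live (tombstones excluded).
  active(): Tx[] {
    return Array.from(this.rows.values())
      .filter((r) => r.tx !== null)
      .map((r) => r.tx as Tx);
  }
}
```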

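Code Sample: Incremental Transactions Sync

```typescript
import type { RemovedTransaction, Transaction } from "plaid";

// cursorStore, tokenStore, plaidClient and transactionProcessor are the
// platform's own services (not shown).
async function syncTransactions(itemId: string): Promise<void> {
  // Start from the last persisted cursor; no cursor means a full initial sync.
  const startCursor: string | undefined = (await cursorStore.get(itemId)) ?? undefined;
  const accessToken = await tokenStore.decrypt(itemId);

  const added: Transaction[] = [];
  const modified: Transaction[] = [];
  const removed: RemovedTransaction[] = [];
  let cursor = startCursor;
  let hasMore = true;

  // /transactions/sync is paginated: keep requesting until has_more is false.
  while (hasMore) {
    const { data } = await plaidClient.transactionsSync({
      access_token: accessToken,
      cursor,
    });
    added.push(...data.added);
    modified.push(...data.modified);
    removed.push(...data.removed);
    hasMore = data.has_more;
    cursor = data.next_cursor;
  }

  // Apply every change idempotently, then persist the cursor only on success.
  // Any throw before this point (including TRANSACTIONS_SYNC_MUTATION_DURING_PAGINATION)
  // leaves startCursor in place, so a retry restarts pagination from it.
  await transactionProcessor.process({ added, modified, removed, itemId });
  await cursorStore.update(itemId, cursor as string);
}
```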

The simplicity of the code masked the importance of the guarantees around it. Every execution was ordered, repeatable, and safe to retry.

Idempotency as a First-Class Requirement

Duplicate transactions were one of the most visible problems in the original system. INSART addressed this by making idempotency non-negotiable.

Each transaction was identified by a stable external identifier. Writes were structured so that applying the same event twice produced the same result as applying it once.

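Code Sample: Idempotent Upsert

```typescript
import type { Transaction as PlaidTransaction } from "plaid";

// Upsert keyed on Plaid's stable transaction_id: replaying the same event
// (retry, webhook redelivery, backfill) rewrites the same row instead of
// inserting a duplicate. Assumes a unique index on externalId; db is the
// platform's database client (not shown).
async function upsertTransaction(tx: PlaidTransaction, itemId: string) {
  await db.transactions.upsert({
    where: { externalId: tx.transaction_id },
    update: {
      amount: tx.amount,
      date: tx.date, // Plaid sends an ISO "YYYY-MM-DD" string
      pending: tx.pending,
      metadata: tx, // full raw payload kept for debugging
    },
    create: {
      externalId: tx.transaction_id,
      itemId,
      amount: tx.amount,
      date: tx.date,
      pending: tx.pending,
      metadata: tx,
    },
  });
}
```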

This approach allowed retries, replays, and backfills without fear of duplication.
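To make the "same result twice" property concrete, here is an in-memory stand-in for a keyed upsert. It is a sketch only: `IdempotentTransactionStore`, its `TxRecord` shape, and the `duplicatesPrevented` counter are hypothetical, and the counter is just one plausible source for a duplicate-prevention metric.

```typescript
interface TxRecord {
  externalId: string; // Plaid transaction_id
  itemId: string;
  amount: number;
  date: string;
  pending: boolean;
}

type UpsertResult = "created" | "updated" | "unchanged";

class IdempotentTransactionStore {
  private readonly byExternalId = new Map<string, TxRecord>();
  // Counts writes that would have been duplicates without the keyed upsert.
  duplicatesPrevented = 0;

  // Never inserts a second row for the same externalId.
  upsert(rec: TxRecord): UpsertResult {
    const existing = this.byExternalId.get(rec.externalId);
    if (!existing) {
      this.byExternalId.set(rec.externalId, { ...rec });
      return "created";
    }
    const unchanged =
      existing.itemId === rec.itemId &&
      existing.amount === rec.amount &&
      existing.date === rec.date &&
      existing.pending === rec.pending;
    if (unchanged) {
      // Replay of an event already applied: a no-op that we count.
      this.duplicatesPrevented++;
      return "unchanged";
    }
    this.byExternalId.set(rec.externalId, { ...rec });
    return "updated";
  }

  get size(): number {
    return this.byExternalId.size;
  }
}
```

Replaying a batch through `upsert` leaves the row count unchanged, which is exactly the property retries and backfills depend on.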

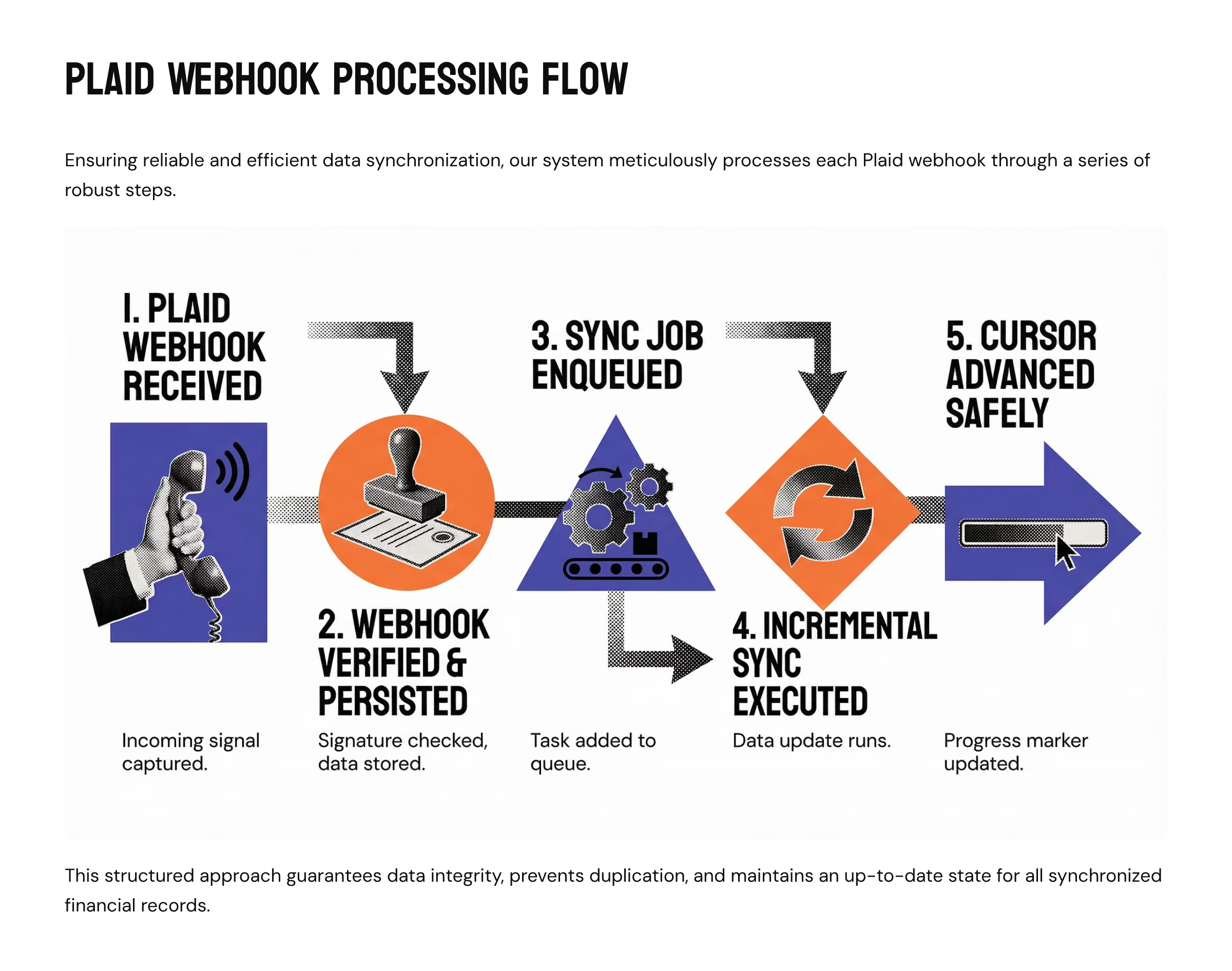

Webhooks Without Race Conditions

Webhooks were redesigned to act as sync triggers, not data carriers.

Instead of attempting to process transaction data directly from webhook payloads, INSART used webhooks to signal that new data might be available. The actual data fetch always occurred through the controlled sync pipeline.

Diagram: Webhook-Driven Sync Orchestration

```text
Plaid webhook received
  ↓
Webhook verified & persisted
  ↓
Sync job enqueued
  ↓
Incremental sync executed
  ↓
Cursor advanced safely
```

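This eliminated race conditions caused by out-of-order webhook delivery: because every sync started from the stored cursor rather than from a webhook payload, the order in which webhooks arrived no longer determined what data was written. It also ensured consistent behavior across environments.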
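Trigger-only webhook handling can be sketched as follows, under stated assumptions: the scheduler is an in-process stand-in for a real job queue, signature verification and persistence are omitted, and `SyncScheduler` is a hypothetical name. The webhook fields `webhook_type`, `webhook_code`, and `item_id`, and the `TRANSACTIONS` / `SYNC_UPDATES_AVAILABLE` pair, follow Plaid's transactions webhook format. Syncs for one item never overlap, and webhooks that arrive while a sync is running collapse into a single follow-up run.

```typescript
interface PlaidWebhook {
  webhook_type: string;
  webhook_code: string;
  item_id: string;
}

class SyncScheduler {
  private readonly running = new Set<string>(); // items with a sync in flight
  private readonly pending = new Set<string>(); // items signaled during a run
  private readonly runSync: (itemId: string) => Promise<void>;

  constructor(runSync: (itemId: string) => Promise<void>) {
    this.runSync = runSync;
  }

  // The webhook is only a signal: its payload is never written as data.
  async onWebhook(hook: PlaidWebhook): Promise<void> {
    if (hook.webhook_type !== "TRANSACTIONS" || hook.webhook_code !== "SYNC_UPDATES_AVAILABLE") {
      return;
    }
    await this.trigger(hook.item_id);
  }

  async trigger(itemId: string): Promise<void> {
    if (this.running.has(itemId)) {
      // A sync is already running: remember to run once more afterwards.
      this.pending.add(itemId);
      return;
    }
    this.running.add(itemId);
    try {
      do {
        this.pending.delete(itemId);
        await this.runSync(itemId); // errors propagate to the caller
      } while (this.pending.has(itemId));
    } finally {
      this.running.delete(itemId);
    }
  }
}
```

Coalescing is safe here because each run reads from the stored cursor, so one follow-up run picks up everything the extra webhooks announced.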

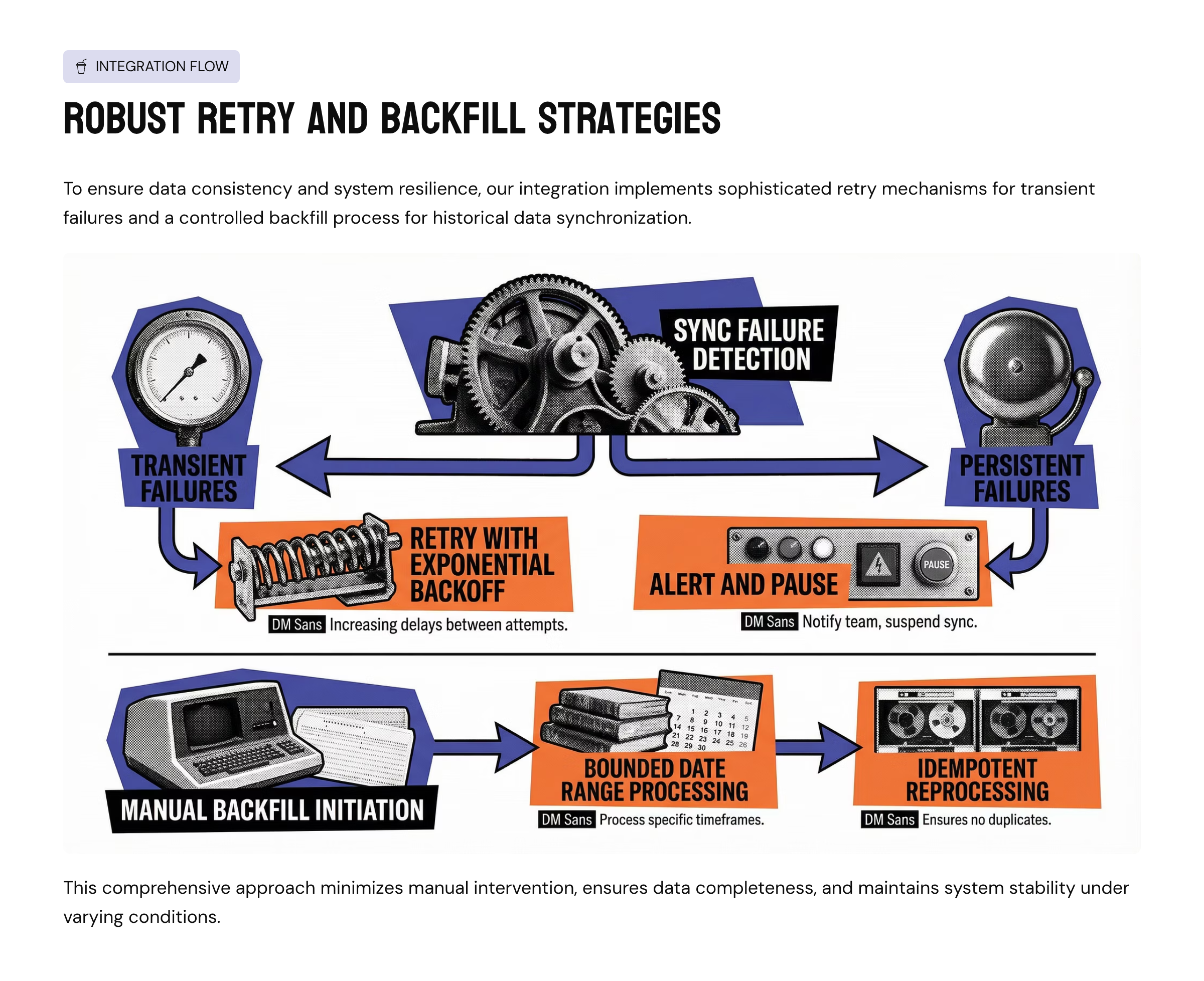

Retry Logic and Backfill Strategy

Retries were redesigned to be deliberate rather than automatic.

Transient failures triggered retries with backoff. Hard failures surfaced alerts and paused progression. Backfills were treated as explicit operations with clearly defined scope, never as side effects.

Diagram: Retry and Backfill Strategy

```text
Sync attempt fails
  ↓
Classify failure
  ├─ Transient → retry with backoff
  └─ Hard → alert surfaced, progression paused

Backfill → explicit operation with clearly defined scope, never a side effect
```

This made recovery predictable and safe.
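The retry half of this strategy can be sketched as a wrapper around a single sync attempt. The sketch assumes, hypothetically, that errors carry a boolean `transient` flag set by whatever code maps Plaid and network errors onto it (a timeout might be transient, while an item that needs re-authentication, such as Plaid's ITEM_LOGIN_REQUIRED, is not). `runWithRetry`, `RetryPolicy`, and the alert callback are illustrative names. Backoff is exponential with full jitter, one common choice among several.

```typescript
interface RetryPolicy {
  maxAttempts: number;
  baseDelayMs: number;
  maxDelayMs: number;
}

type SyncOutcome = "ok" | "paused";

// Hypothetical convention: errors expose `transient: true` when retrying can help.
function isTransient(err: unknown): boolean {
  return typeof err === "object" && err !== null && (err as { transient?: unknown }).transient === true;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Exponential backoff with full jitter, capped at maxDelayMs.
function backoffMs(attempt: number, p: RetryPolicy): number {
  const ceiling = Math.min(p.maxDelayMs, p.baseDelayMs * 2 ** (attempt - 1));
  return Math.random() * ceiling;
}

async function runWithRetry(
  itemId: string,
  attemptSync: () => Promise<void>,
  policy: RetryPolicy,
  raiseAlert: (itemId: string, err: unknown) => void,
): Promise<SyncOutcome> {
  for (let attempt = 1; attempt <= policy.maxAttempts; attempt++) {
    try {
      await attemptSync();
      return "ok";
    } catch (err) {
      // Hard failures, and transient ones that exhausted the budget, stop here:
      // an alert is raised and the item is paused until someone intervenes.
      if (!isTransient(err) || attempt === policy.maxAttempts) {
        raiseAlert(itemId, err);
        return "paused";
      }
      await sleep(backoffMs(attempt, policy));
    }
  }
  return "paused"; // only reached when maxAttempts < 1
}
```

Because the sync itself persists its cursor only after success, each retry starts from the same known point.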

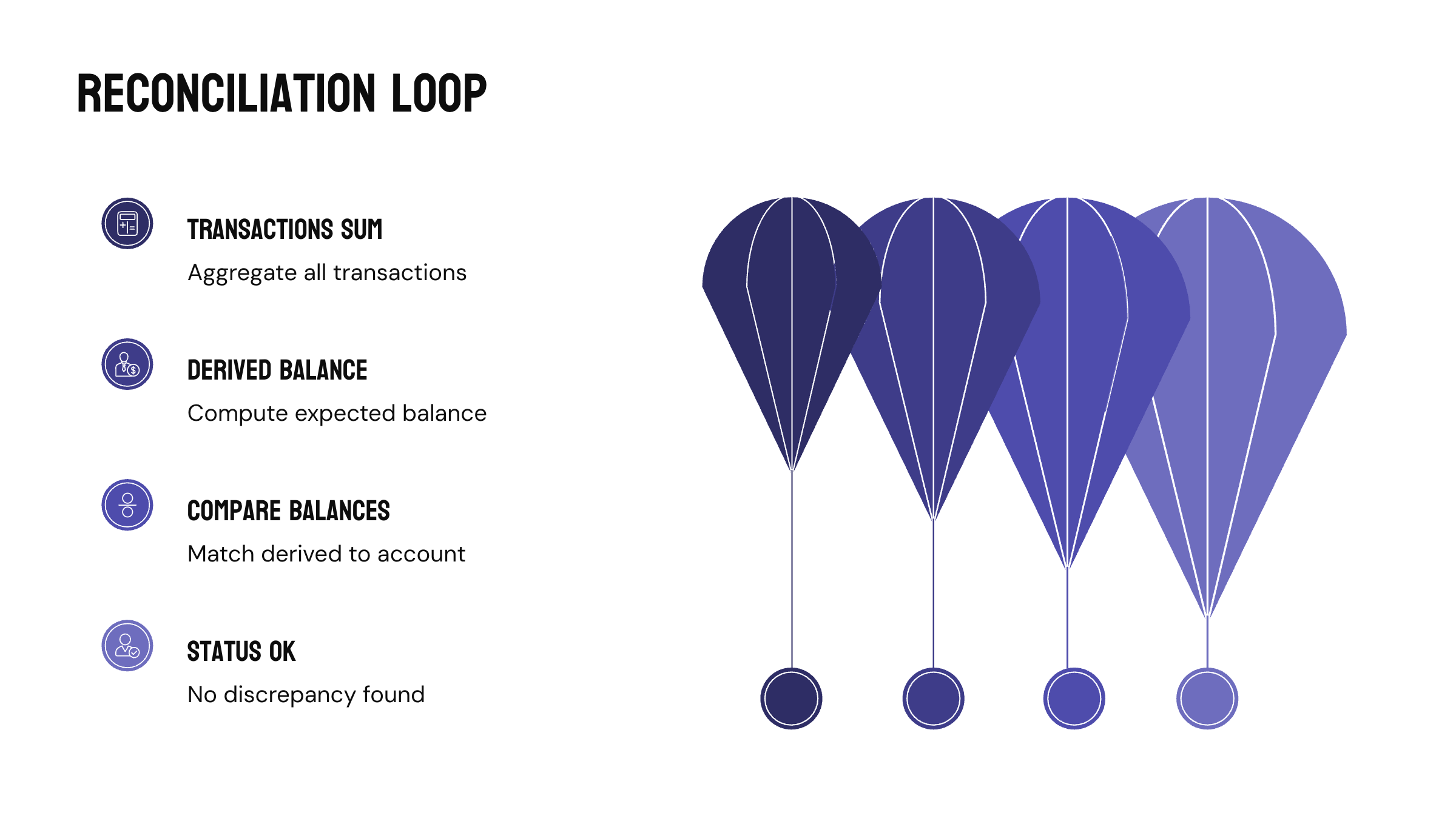

Reconciliation as an Explicit Phase

INSART introduced reconciliation as a formal step rather than an implicit hope.

Transaction-derived balances were periodically reconciled against account balances to detect drift. Discrepancies were logged, surfaced, and investigated, not silently ignored.

Diagram: Reconciliation Loop

```text
Transactions sum
  ↓
Derived balance
  ↓
Compare with account balance
  ├─ Match → OK
  └─ Mismatch → investigation record
```

This provided confidence that the data layer reflected reality, not just successful API calls.
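The reconciliation loop reduces to a small piece of arithmetic. This is a minimal sketch under stated assumptions: amounts are integer cents, the opening balance that anchors the derivation is a hypothetical input (the case study does not say how the starting point was chosen), only posted transactions are summed, and the account is a depository account. It relies on Plaid's sign convention, where positive amounts are money leaving the account; for credit accounts, whose balance is the amount owed, the subtraction would become an addition. `reconcileAccount` and `InvestigationRecord` are illustrative names.

```typescript
interface LedgerTx {
  amountCents: number; // Plaid convention: positive = money out, negative = money in
  pending: boolean;
}

interface InvestigationRecord {
  accountId: string;
  derivedCents: number;
  reportedCents: number;
  driftCents: number; // reported minus derived
  detectedAt: Date;
}

type ReconciliationResult = { status: "ok" } | { status: "mismatch"; record: InvestigationRecord };

function reconcileAccount(
  accountId: string,
  openingBalanceCents: number,
  txns: LedgerTx[],
  reportedBalanceCents: number,
  toleranceCents = 0,
): ReconciliationResult {
  // Sum posted transactions only; pending ones may still change or disappear.
  const netOutflow = txns
    .filter((t) => !t.pending)
    .reduce((sum, t) => sum + t.amountCents, 0);
  const derivedCents = openingBalanceCents - netOutflow;
  const driftCents = reportedBalanceCents - derivedCents;
  if (Math.abs(driftCents) <= toleranceCents) return { status: "ok" };
  // A mismatch is never ignored: it produces a record for investigation.
  return {
    status: "mismatch",
    record: { accountId, derivedCents, reportedCents: reportedBalanceCents, driftCents, detectedAt: new Date() },
  };
}
```

For example, an opening balance of 10000 cents with a posted 2500 outflow and a posted 1000 inflow derives to 8500; a reported balance of 8400 yields a mismatch with a drift of -100.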

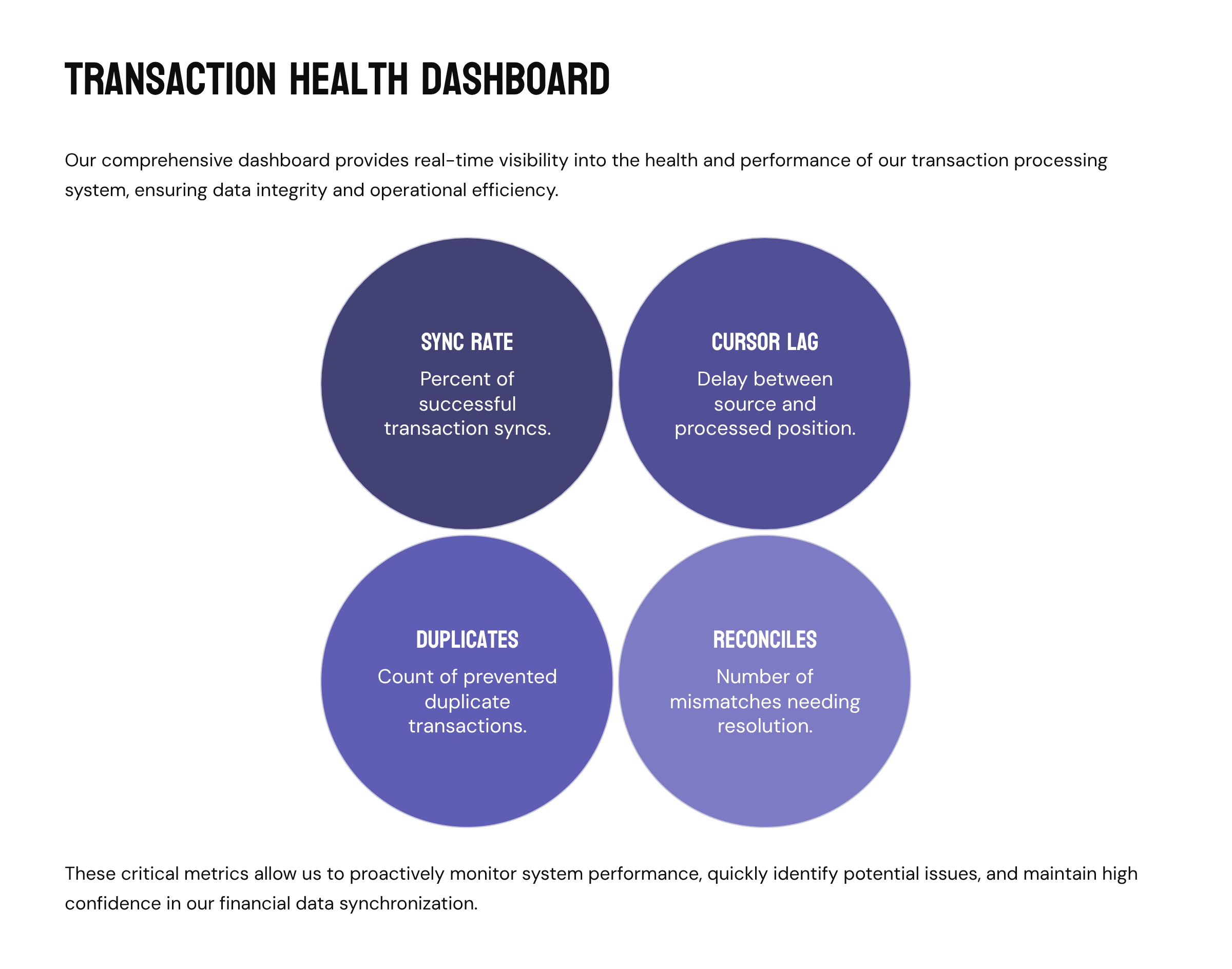

Observability and Operational Confidence

Finally, INSART introduced transaction-specific observability.

The team could now see sync latency, cursor lag, duplicate prevention metrics, reconciliation mismatches, and webhook processing delays. For the first time, transaction ingestion was visible as a system rather than a mystery.

Diagram: Transactions Health Dashboard

```text
Sync success rate
Cursor lag
Duplicate prevention count
Reconciliation mismatches
Webhook-trigger latency
```

Operational confidence replaced guesswork.
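Two of these panels can be sketched from a simple log of sync attempts. The definitions are assumptions rather than the platform's documented ones: success rate is the share of attempts in a time window that succeeded, and cursor lag is taken as the time since an item's last successful sync. `TransactionsHealth` is a hypothetical name, and timestamps are epoch milliseconds supplied by the caller.

```typescript
interface SyncAttempt {
  itemId: string;
  ok: boolean;
  at: number; // epoch milliseconds
}

class TransactionsHealth {
  // Unbounded for brevity; a real tracker would keep a rolling window.
  private readonly attempts: SyncAttempt[] = [];
  private readonly lastSuccessAt = new Map<string, number>();

  record(itemId: string, ok: boolean, at: number): void {
    this.attempts.push({ itemId, ok, at });
    if (ok) this.lastSuccessAt.set(itemId, at);
  }

  // Share of attempts at or after `sinceMs` that succeeded; null if none.
  successRate(sinceMs: number): number | null {
    const recent = this.attempts.filter((a) => a.at >= sinceMs);
    if (recent.length === 0) return null;
    return recent.filter((a) => a.ok).length / recent.length;
  }

  // Time since the item's last successful sync; Infinity if it never succeeded.
  cursorLagMs(itemId: string, now: number): number {
    const last = this.lastSuccessAt.get(itemId);
    return last === undefined ? Infinity : now - last;
  }
}
```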

Outcome

The impact was immediate and lasting.

Transaction ingestion stabilized. Duplicate transactions disappeared. Balances aligned consistently. Incidents dropped sharply once the new pipeline went live. Engineers stopped treating the data layer as fragile and began building confidently on top of it.

Most importantly, the platform gained something it had been missing: predictable financial truth.

Closing Thought

Transaction data is not difficult because it is complex. It is difficult because it evolves over time.

INSART’s approach acknowledges that reality and designs for it explicitly. By prioritizing data integrity, deterministic behavior, and operational clarity, transaction ingestion becomes infrastructure rather than a recurring source of risk.

This case study reflects how that transformation happens.